A Practical Introduction to Quantum Computing

Quantum theory began as a rather esoteric branch of physics, but its influence spread rapidly throughout the physical sciences. More than a century later, it now contributes to computation, communication, and information applications — resulting in the rise of quantum technology as an industrial pursuit. Here, we introduce the concepts behind quantum theory, quantum technology, and quantum computation, and view quantum theory as an extension of probability in order to connect quantum ideas to well-known concepts in probability theory and statistics. This comparison inspires a discussion about quantum theory’s infiltration of the current technological landscape before finally arriving at the notion of quantum computing itself. By examining the major questions within the field of quantum computing, we hope to help direct the influx of industrial and applied mathematicians to the most impactful areas.

Quantum Mechanics

We begin with a probability-first approach to quantum theory. In fact, viewing quantum mechanics as a straightforward extension of probability theory removes much of the surrounding confusion and mystery. In probability theory, a vector of numbers describes the likelihood that a discrete set of events will occur when a random variable is observed or measured. Similarly, the quantum probability density matrix describes the likelihood that certain events will occur when a quantum system is observed or measured. The entries of probability vectors are normalized, real, nonnegative coefficients, while the eigenvalues for the density matrix are also normalized, real, and nonnegative.

The connection between the two types of states is perhaps best explained by example. Consider a probability vector

\[\begin{equation} p =\begin{bmatrix} 0.25 \\ 0.50 \\ 0.15 \\ 0.10 \end{bmatrix} \end{equation}. \tag1\]

The corresponding quantum probability density matrix is then

\[\begin{equation} \rho_p = \begin{bmatrix} 0.25 & & & \\ &0.50 &&\\ &&0.15& \\ &&&0.10 \end{bmatrix} \end{equation}. \tag2\]

We can rotate this matrix to change the basis while still preserving the conditions on the eigenvalues. This relation between the two theories can generalize other concepts (such as entropy) almost directly:

\[H(p)=-\sum_k p_k\log p_k \quad \text{and} \quad H(\rho) = -\operatorname{Tr}[\rho\log\rho].\tag3\]

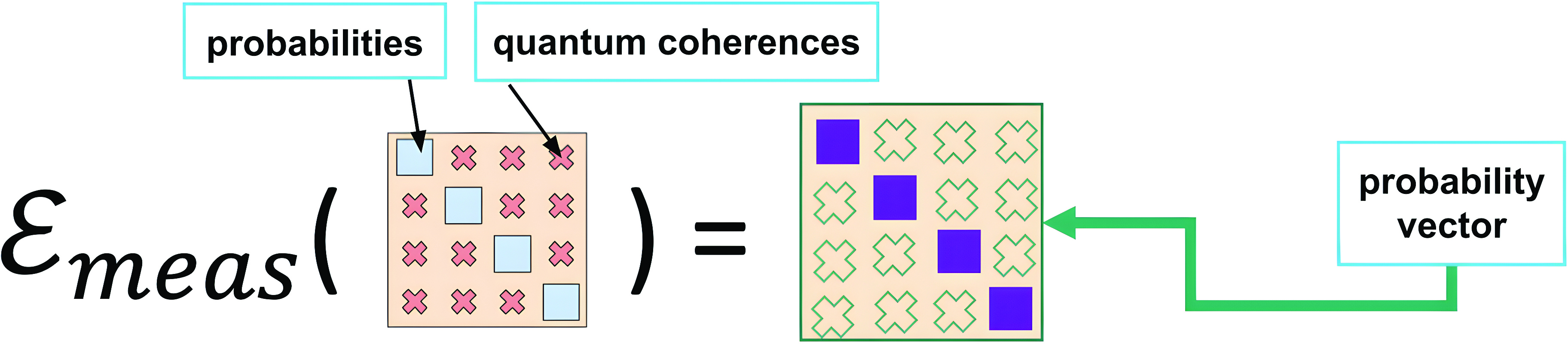

Moreover, the notion of quantum measurement is directly inherited from the underlying probability theory (see Figure 1).

The off-diagonal elements of the density matrix are called coherences (coherence is the fundamental difference between quantum and probability theory). The production of modern technological devices is implicitly based on quantum mechanics, but often in nonobvious ways — in contrast with devices whose operation depends on quantum coherence.

Quantum Technology

Realizing the potential of machine learning technology required significant improvements in networking, memory, data availability, software, and hardware. Quantum computing—which is currently in the nascent stages of industrialization—similarly awaits advancements in theory, implementation, and hardware. Although the full societal impact of quantum technology is not yet actualized, both startup companies and large technology firms have created a variety of early quantum devices. Broadly speaking, there are three key areas of quantum technology: communication, computation, and sensing.

The presence of coherence in the quantum situation expands upon the idea of correlation in probability theory. Quantum correlation—commonly known as entanglement—is a stronger type of correlation with cryptographic implications. For example, if two communicators share entanglement, an eavesdropper will be detected when they perturb the correlations of the secure channel; this type of quantum networking has already seen some commercial deployments.

In quantum computing, coherence and entanglement are used to manipulate information. The construction of such a computing device requires the stabilization, manipulation, and readout of quantum information. These conditions present a difficult engineering problem because isolation from the environment often conflicts with responsive experimental controls. For computational purposes, the quantum system should remain in a state where its quantum probability density matrix has only one non-zero eigenspace. Noise from environmental interactions, control errors, or other stochastic processes will cause the density matrix to spread into additional eigenspaces.

While ideal quantum bits (qubits) should be easy to control yet insensitive to the environment, a nonideal qubit that is very sensitive to its environment can effectively serve as a sensor. Among other applications, quantum sensors can detect the presence of small magnetic fields, specific molecules, and even gravitational waves.

Ultimately, the use of coherence is the defining feature of all forms of quantum technology. This characteristic allows for stronger correlations between systems, faster computations, and increased sensitivity to microscopic changes in the environment.

Quantum Computation

The development of quantum computation arose in two major waves; the first wave consisted of quantum algorithms based on formal methods, and the second wave comprised quantum algorithms that were largely based on optimization methods. Quantum error correction evolved during the initial burst of activity. Because questions of quantum noise were considered only formally and shown to be theoretically surmountable, the first wave of quantum algorithms assumed noiseless quantum device operation (or fully quantum error-corrected systems). Two key algorithms emerged from this era: phase estimation and Grover’s algorithm. Phase estimation, which constitutes the heart of Peter Shor’s factoring algorithm, is largely responsible for driving the early conversation around quantum computing [3].

The availability of noisy intermediate-scale quantum (NISQ) computers inspired the second wave of quantum algorithms, which has yielded quantum tasks that rely on close integration with conventional computing to mitigate noise or extend the range of NISQ devices [2]. The intermediate scale of NISQ computers means that they possess on the order of tens to hundreds of qubits. Decoherence via either unwanted environmental interactions or control errors imposes a noisy functionality on these devices. This lack of control can prevent the full system from being entangled at once — especially as the number of qubits per device increases. Quantum error correction is thus a necessity. Researchers can combine many noisy qubits in an error correcting scheme to form a logical qubit. A nearly continuous effort of reading and resetting physical qubits then yields a logical qubit that can maintain its quantum state indefinitely.

Initial interest in the second wave of quantum algorithms centered on adiabatic annealing. Early expectations that adiabatic annealing would be resilient to noise led to optimism about an early quantum computing company called D-Wave Systems. Due to limitations on the form of the final control Hamiltonian, D-Wave’s superconducting qubit devices are not universal. However, lifting these limitations would equate adiabatic quantum computing with standard quantum computing models. Since D-Wave computers only solve classical problems, users are fundamentally testing the distinction between simulated and quantum annealing for computational advantages.

Universal quantum computers based on superconducting qubit devices, trapped ion devices, and neutral atom devices are all publicly available via the cloud. The outcomes of these machines are statistically sampled from identically prepared quantum density matrices. Before each sample, the same quantum instructions re-prepare the quantum probability density matrix. Researchers must then use the sampled outcome to solve computational problems based on the corresponding probability density matrix.

Since 2019, there have been several high-profile attempts to demonstrate tasks that quantum computers can perform but conventional computers cannot. Flagship experiments have implemented problems such as random circuit sampling and boson sampling on systems with more than 50 qubits. However, no public claims of quantum advantage have withstood scrutiny thus far.

Efforts to reach the elusive quantum advantage are generally thwarted in two key ways. First, advancements in the simulation of large, noisy quantum systems have enabled counterarguments that are usually based on tensor network methods, which efficiently compress and manipulate quantum states with low entanglement. Second, statistical spoofing has allowed conventional computing to keep pace with the results of flagship experiments. Spoofing algorithms do not attempt to find the right answer for the right reason; instead, they are optimized to exploit adversarial loopholes and obtain the highest statistical score on the defined benchmark.

Applied Mathematics and Quantum Algorithms

As theoretical physicist and mathematician Freeman Dyson once said, “Some mathematicians are birds, others are frogs. Birds fly high in the air and survey broad vistas of mathematics out to the far horizon … Frogs live in the mud below ... delight in the details of particular objects, and they solve problems one at a time” [1]. We need both broad landscape views and intimate knowledge to select and solve difficult problems in a variety of areas, especially as we extend the study of probability to the study of quantum mechanics, advance technology based on coherent quantum systems, and continue to explore the ambitions of quantum computing.

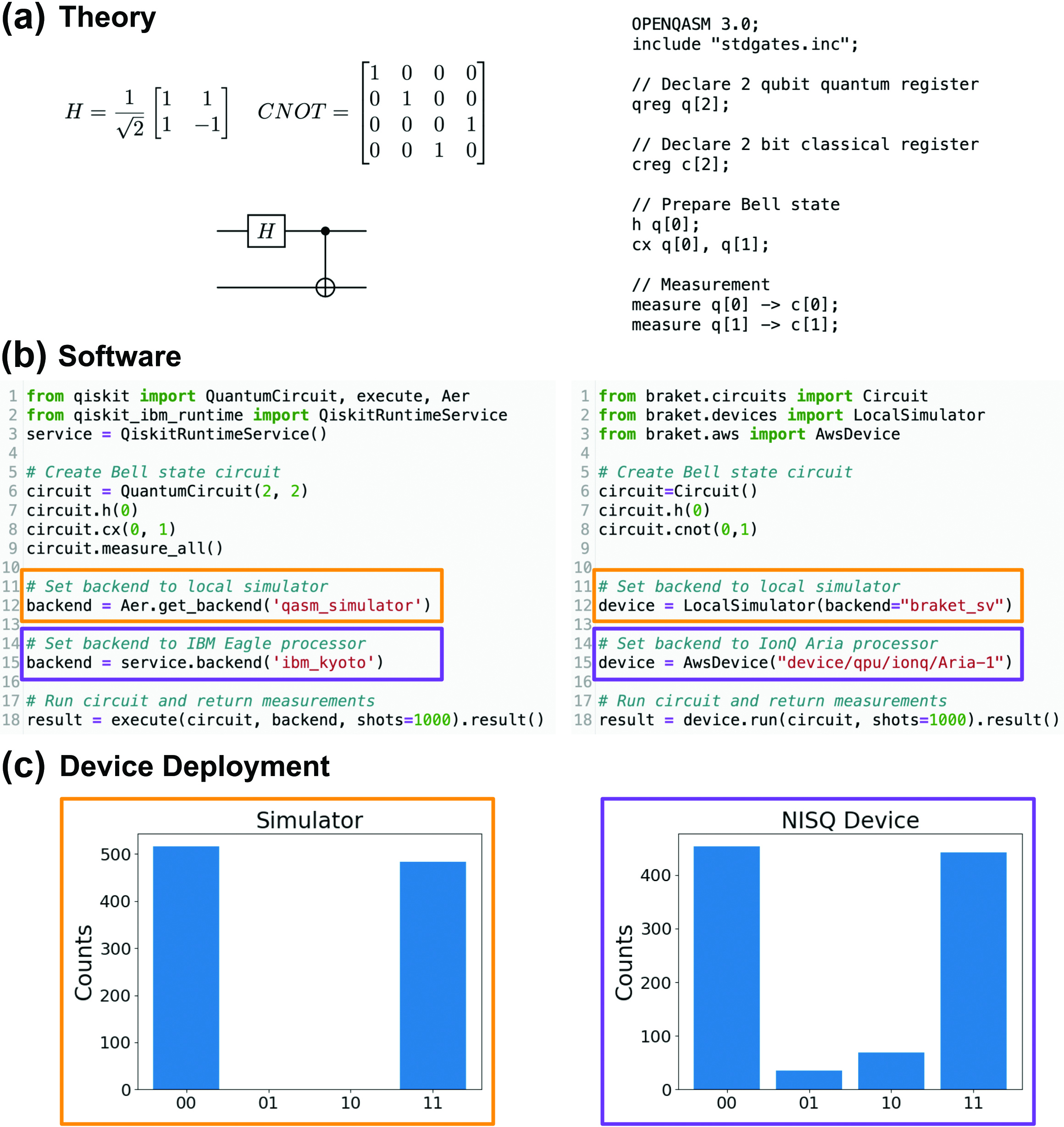

Although quantum theory has existed for an entire century, we still do not fully understand how this extension of probability affects computation and communication (see Figure 2a). As mentioned previously, identifying and demonstrating that a problem is uniquely solvable with quantum technology remains an open concern. This challenge raises questions that pertain to statistical measures, estimation theory, and correlations — all of which appear in applied mathematics. Similarly, the change from probability to quantum deeply affects the fundamentals of cryptography and communication.

The next level is the implementation of theory as software. Numerous software development kits, toolkits, and packages help programmers translate descriptions of algorithms into languages like Julia and Python. Figure 2b provides code snippets from two of the most popular quantum software development kits: Qiskit and Amazon Braket. Many interesting software engineering problems arise at this level of abstraction. For example, certain softwares can now emulate quantum devices via a variety of approaches that range from large matrix-vector multiplication to stochastic sampling methods. Excitingly, current research efforts can move beyond simulation with conventional computers to the utilization of nascent quantum devices.

The subsequent level involves the deployment of today’s quantum technology. Cloud computing companies such as Amazon Web Services offer paid access to existing quantum computing devices. The price, connectivity, quality, and physics of these qubit devices vary widely. Their physical qubits are not perfect, and the final result will exhibit physical noise in addition to statistical noise from the finite sample number (see Figure 2c). For this reason, active areas of research include noise mitigation, efficient statistical sampling, and optimal device-level compilation.

The connections between quantum theory, technology, and computation comprise a fantastic ecosystem for both “birds” and “frogs.” “Birds” help us make new connections between known ideas in mathematics, engineering, machine learning, and other application areas. At the same time, we need “frogs” that can translate our understanding of quantum mechanics to quantum engineers, thus allowing them to continue their pursuit of practical quantum technology.

References

[1] Dyson, F.J. (2009). Birds and frogs. Not. Am. Math. Soc., 56(2), 212-223.

[2] Preskill, J. (2018). Quantum computing in the NISQ era and beyond. Quantum, 2, 79.

[3] Shor, P.W. (1994). Algorithms for quantum computation: Discrete logarithms and factoring. In Proceedings 35th annual symposium on foundations of computer science (pp. 124-134). Santa Fe, NM: Institute of Electrical and Electronics Engineers.

About the Authors

Casey Dowdle

Ph.D. student, Dartmouth College

Casey Dowdle is a Ph.D. student in mathematics at Dartmouth College. His research interests include quantum computing in the context of quantum chemistry, machine learning for many-body physics, and classical dynamics with quantum circuits.

James Whitfield

Associate professor, Dartmouth College

James Whitfield is an associate professor in the Department of Physics and Astronomy at Dartmouth. He holds a Ph.D. in chemical physics from Harvard University. For more than a decade, Whitfield has worked at the intersection of quantum chemistry and quantum computing; he currently explores quantum education in his joint appointment as an Amazon Visiting Academic.