From Sequences of Images to Trajectories: A Tracking Algorithm for Dynamical Systems

In the context of dynamical systems, researchers are often interested in the trajectory of an object or agent under the influence of physical laws. To obtain accurate physical observations, humans can carefully measure the trajectory in question (often frame-by-frame in a video) [10]. Alternatively, one may use edge detection, background subtraction, or a host of other computer algorithms, including machine learning (ML) techniques. MATLAB and Python conveniently offer tools that detect objects in images (such as MATLAB’s imfindcircles function and the OpenCV library), which users can employ to automatically track an object frame-by-frame via a simple for loop. Indeed, the success of modern ML and artificial intelligence algorithms in areas such as vision, speech, and language would suggest that object tracking should be a trivial algorithmic task when compared to achievements like ChatGPT. However, the computer vision community’s generic image detection algorithms are often not optimally suited for dynamics problems.

![<strong>Figure 1.</strong> A stark difference in contrast. <strong>1a.</strong> Black ball on a white surface. <strong>1b.</strong> Two droplets on a vibrating fluid bath, with visible reflections of the droplets on the bath. Figure 1a courtesy of [6] and 1b courtesy of [3].](/media/zkkfqddx/figure1.jpg)

Consider a ball that is rolling on a surface. At first glance, the contrast between the ball and surface makes this problem seem like a simple scenario for MATLAB’s Image Processing Toolbox (see Figure 1a). However, significant unexpected difficulties required months of debugging [6]. While computer algorithms aim to reduce human effort, assembling the automation can often require almost as much work as when a human inspects each frame. Moreover, blindly performing the automation without verifying the algorithm’s validity for the specific experiment is even more troubling and can generate erroneous results.

Now consider a more difficult problem wherein the object and background are the same material. In Figure 1b, the object is a walking droplet (walker) and the background is a vibrating fluid bath on which the walker bounces [3]. Background subtraction does not work because it is difficult to determine the background’s exact location, and edge detection also does not work because we do not know where the fluid bath ends and the droplet begins. In this case, an algorithm must identify the droplets and ignore the mirrored images on the bath. For this and many other real-world problems, no simple automation techniques significantly reduce human input.

![<strong>Figure 2.</strong> Sample trajectories of multiple walkers and granular intruders. <strong>2a.</strong> Standard lighting for three droplets. <strong>2b.</strong> Single walker with the lights turned off. <strong>2c.</strong> Single walker with extreme light saturation. <strong>2d.</strong> Four granular intruders. Figure adapted from [8].](/media/e51bhmuv/figure2.jpg)

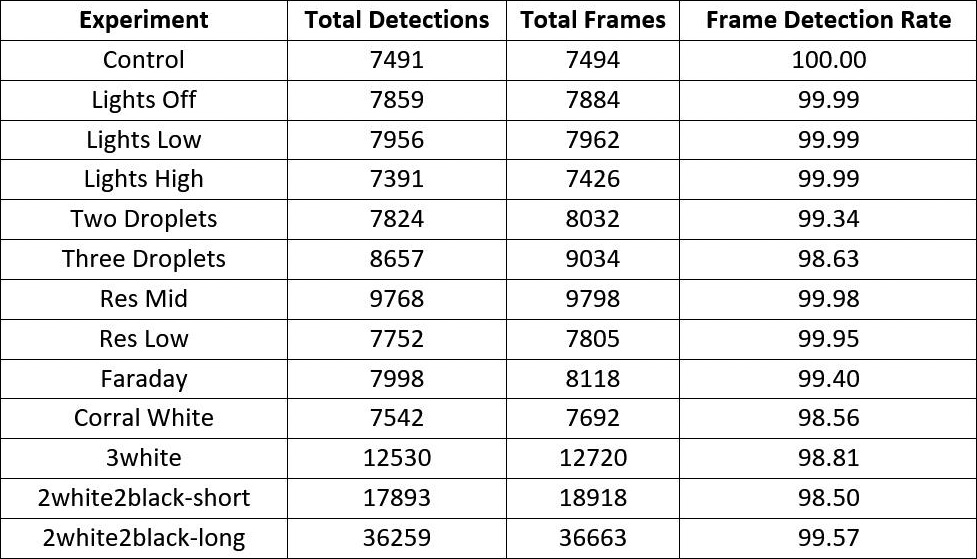

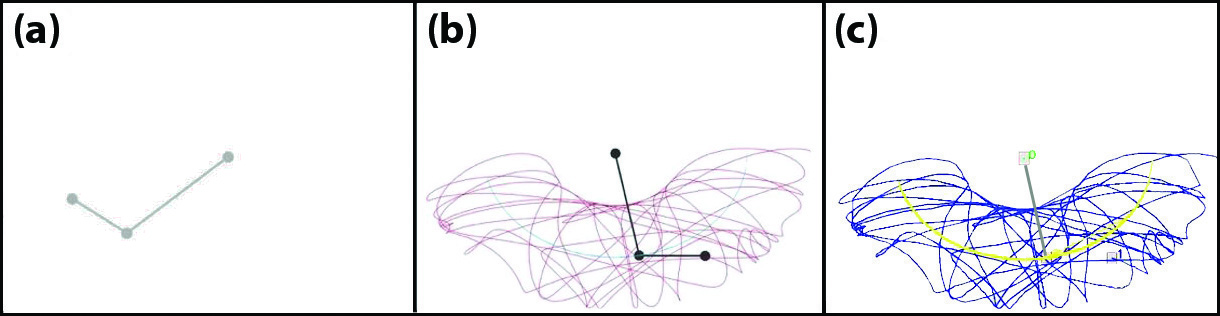

Given these realizations, we developed new object tracking algorithms and tested previous state-of-the-art (SOTA) algorithms for two dynamical problems: (i) walking droplets and (ii) granular intruders [8]. Like many tracking algorithms, our ML-based approach follows the tracking-by-detection idea. First, we locate the objects of interest (i.e., walkers and intruders) in each frame; these detections are then associated with each other across subsequent frames to track individual objects. For the detection phase, we utilize YOLOv8 (You Only Look Once version 8): a popular deep-learning-based object detection model. At this stage, the critical step is to match the number of detections with the actual number of objects in the experiment and require a high confidence score for each detection. This method eliminates false-positive identifications that may corrupt the tracking efforts. For the sake of interpretability, we employ the simplest identification approach: we calculate the distances between objects in two frames and use the Hungarian algorithm (a combinatorial optimization algorithm that solves an assignment problem in polynomial time) to identify corresponding objects in two subsequent frames. Figure 2 displays some of our tracking algorithm’s captured trajectories [8]. Figures 2a-2c depict several walking droplet experiments, while Figure 2d illustrates samples from the granular intruder experiment.
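The filtering and association steps can be illustrated with a short, self-contained Python sketch. It is not the implementation from [8]: the `(x, y, confidence)` detection format, the 0.8 confidence threshold, and the function names `filter_detections`, `hungarian`, and `associate` are all assumptions made for illustration, and hard-coded coordinates stand in for the output of a detector such as YOLOv8.

```python
import math

def filter_detections(detections, n_objects, min_conf=0.8):
    """Keep confident detections; return None unless exactly n_objects remain.

    detections: list of (x, y, confidence) tuples (hypothetical format).
    """
    kept = [(x, y) for x, y, conf in detections if conf >= min_conf]
    return kept if len(kept) == n_objects else None

def hungarian(cost):
    """Solve a square assignment problem (Hungarian method with potentials).

    Returns a list where entry i is the column assigned to row i.
    """
    n = len(cost)
    inf = float("inf")
    u = [0.0] * (n + 1)   # row potentials
    v = [0.0] * (n + 1)   # column potentials
    p = [0] * (n + 1)     # p[j] = row currently matched to column j (1-based)
    way = [0] * (n + 1)   # predecessor columns on the augmenting path
    for i in range(1, n + 1):
        p[0] = i
        j0 = 0
        minv = [inf] * (n + 1)
        used = [False] * (n + 1)
        while True:
            used[j0] = True
            i0, delta, j1 = p[j0], inf, 0
            for j in range(1, n + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]
                    if cur < minv[j]:
                        minv[j], way[j] = cur, j0
                    if minv[j] < delta:
                        delta, j1 = minv[j], j
            for j in range(n + 1):
                if used[j]:
                    u[p[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if p[j0] == 0:
                break
        while j0 != 0:  # flip the matching along the augmenting path
            j1 = way[j0]
            p[j0] = p[j1]
            j0 = j1
    assignment = [-1] * n
    for j in range(1, n + 1):
        assignment[p[j] - 1] = j - 1
    return assignment

def associate(prev_positions, new_positions):
    """Match each previous object to a new detection by minimal total distance."""
    cost = [[math.dist(p, q) for q in new_positions] for p in prev_positions]
    return hungarian(cost)

# Three tracked objects, then four raw detections (one is a low-confidence reflection).
prev = [(0.0, 0.0), (10.0, 0.0), (5.0, 8.0)]
raw = [(10.5, 0.2, 0.95), (5.1, 7.7, 0.91), (0.3, -0.1, 0.97), (5.0, 6.0, 0.30)]
current = filter_detections(raw, n_objects=3)
matches = associate(prev, current)  # matches[i] = index in `current` for object i
print(matches)
```

In this sketch, a frame whose confident detections do not match the known object count yields `None`; how such frames are handled (skipped, interpolated, or re-detected) is left open here.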

Our algorithm accurately tracks more than 98 percent of frames in a wide spectrum of experimental settings, which creates very high-fidelity data (see Figure 3). Tracking failure results in an identity (ID) switch and can manifest in different forms; for instance, trackers may lose an already tracked object, confuse the initial object identities with other class identities, or fail to track the objects altogether. To compare our algorithm’s performance against other methods, we tested five SOTA models: StrongSORT [5], Observation-Centric (OC)-SORT [4], Deep OC-SORT [9], BoT-SORT [1], and ByteTrack [11]. Surprisingly, all of these methods fail for multiple droplet experiments and make ID switches (see Figure 4).

![<strong>Figure 4.</strong> Frame-by-frame identity (ID) switching and new false assignments in previous state-of-the-art models. The top row depicts initial ID assignments and the bottom row shows ID assignments in a later frame. <strong>4a.</strong> StrongSORT. <strong>4b.</strong> Observation-Centric (OC)-SORT. <strong>4c.</strong> Deep OC-SORT. <strong>4d.</strong> BoT-SORT. <strong>4e.</strong> ByteTrack. Figure adapted from [8].](/media/gcxfhxxj/figure4.jpg)

General computer vision applications utilize a handful of standard performance metrics, such as multiple object tracking accuracy (MOTA), multiple object tracking precision (MOTP), and the number of ID switches, with some given tolerance. However, dynamicists typically seek to identify the trajectories of multiple objects with absolute accuracy; the tracker must therefore be completely free of ID switches, as even a single switch leads to false trajectories. As such, trajectories from these SOTA models may not always be useful. Our model is free of ID switches and hence more robust for dynamicists, especially when attempting to infer the underlying dynamics.
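To see why an aggregate score can hide a failure that matters for dynamics, consider the following minimal Python sketch. It counts ID switches and evaluates the standard multiple object tracking accuracy, MOTA = 1 − (FN + FP + IDSW) / GT, on a synthetic two-object sequence. The per-frame dictionary format and the function names `count_id_switches` and `mota` are assumptions for illustration, not part of any tracking library.

```python
def count_id_switches(frames):
    """Count ID switches over a sequence.

    frames: list of dicts mapping a ground-truth object label to the tracker
    ID matched to it in that frame, or None if the object was missed
    (hypothetical format).
    """
    last_id = {}
    switches = 0
    for frame in frames:
        for obj, track_id in frame.items():
            if track_id is None:
                continue  # a miss is a false negative, not a switch
            if obj in last_id and last_id[obj] != track_id:
                switches += 1
            last_id[obj] = track_id
    return switches

def mota(false_negatives, false_positives, id_switches, n_ground_truth):
    """Multiple object tracking accuracy over the whole sequence."""
    return 1.0 - (false_negatives + false_positives + id_switches) / n_ground_truth

# Two objects tracked for 1,000 frames; the tracker swaps their IDs at frame 500.
frames = [{"A": 1, "B": 2}] * 500 + [{"A": 2, "B": 1}] * 500
switches = count_id_switches(frames)
score = mota(0, 0, switches, n_ground_truth=2 * len(frames))
print(switches, score)  # 2 switches, MOTA of about 0.999
```

A MOTA of roughly 0.999 looks excellent by computer vision standards, yet both trajectories are mislabeled for the second half of the sequence.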

As an illustrative sample, consider the double pendulum: a canonical example of a chaotic system. We use MATLAB’s ode45 to numerically simulate the pendulum, which yields a ground truth for the tracking algorithm (see Figure 5). We record the simulation as a 20-second video with a frame rate of 60 hertz, where all of the dynamical information is hidden. For an additional challenge, we give the nodes and arms of the pendulum a light gray color that is difficult to see — especially as a moving picture. We then train the algorithm with ten frames of the experiment video, which contains a total of 1,200 frames. Doing so reveals close agreement between the ground truth and the tracking algorithm. As a next step, users could potentially employ sparse identification methods like SINDy [2, 7] to discover the differential equations that govern the dynamics. Concurrent utilization of these methods could potentially automate a workflow that begins with empirical observations and ends with a dynamical systems model.
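A ground-truth data set of this kind can be sketched in a few lines of Python. The version below is an illustration under stated assumptions rather than the code behind Figure 5: it replaces ode45's adaptive Dormand-Prince scheme with a fixed-step classical fourth-order Runge-Kutta integrator, and the unit masses, unit arm lengths, initial angles, and number of substeps per frame are all arbitrary choices.

```python
import math

G = 9.81  # gravitational acceleration (m/s^2)

def derivatives(state, m1=1.0, m2=1.0, l1=1.0, l2=1.0):
    """Right-hand side of the double pendulum equations of motion."""
    th1, w1, th2, w2 = state
    d = th1 - th2
    den = 2 * m1 + m2 - m2 * math.cos(2 * d)
    a1 = (-G * (2 * m1 + m2) * math.sin(th1)
          - m2 * G * math.sin(th1 - 2 * th2)
          - 2 * math.sin(d) * m2 * (w2 ** 2 * l2 + w1 ** 2 * l1 * math.cos(d))
          ) / (l1 * den)
    a2 = (2 * math.sin(d) * (w1 ** 2 * l1 * (m1 + m2)
                             + G * (m1 + m2) * math.cos(th1)
                             + w2 ** 2 * l2 * m2 * math.cos(d))
          ) / (l2 * den)
    return (w1, a1, w2, a2)

def rk4_step(state, dt):
    """One classical fourth-order Runge-Kutta step."""
    def shifted(k, scale):
        return tuple(s + scale * ki for s, ki in zip(state, k))
    k1 = derivatives(state)
    k2 = derivatives(shifted(k1, dt / 2))
    k3 = derivatives(shifted(k2, dt / 2))
    k4 = derivatives(shifted(k3, dt))
    return tuple(s + dt / 6 * (a + 2 * b + 2 * c + e)
                 for s, a, b, c, e in zip(state, k1, k2, k3, k4))

def simulate(state, duration=20.0, fps=60, substeps=10):
    """Return one state (theta1, omega1, theta2, omega2) per video frame."""
    dt = 1.0 / (fps * substeps)
    frames = []
    for _ in range(int(duration * fps)):
        frames.append(state)
        for _ in range(substeps):
            state = rk4_step(state, dt)
    return frames

def bob_positions(state, l1=1.0, l2=1.0):
    """Cartesian coordinates of the two nodes, with the pivot at the origin."""
    th1, _, th2, _ = state
    x1, y1 = l1 * math.sin(th1), -l1 * math.cos(th1)
    x2, y2 = x1 + l2 * math.sin(th2), y1 - l2 * math.cos(th2)
    return (x1, y1), (x2, y2)

def energy(state, m1=1.0, m2=1.0, l1=1.0, l2=1.0):
    """Total mechanical energy, used here only as a sanity check."""
    th1, w1, th2, w2 = state
    kinetic = (0.5 * m1 * (l1 * w1) ** 2
               + 0.5 * m2 * ((l1 * w1) ** 2 + (l2 * w2) ** 2
                             + 2 * l1 * l2 * w1 * w2 * math.cos(th1 - th2)))
    potential = -(m1 + m2) * G * l1 * math.cos(th1) - m2 * G * l2 * math.cos(th2)
    return kinetic + potential

frames = simulate((2.0, 0.0, 2.5, 0.0))  # released from rest at large angles
ground_truth = [bob_positions(s) for s in frames]
print(len(ground_truth))  # 1200 frames: 20 s at 60 frames per second
```

Each entry of `ground_truth` holds the positions of the two nodes for one frame, which is the quantity one would compare against the tracker's output after converting pixel coordinates to physical units.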

Our tracking algorithm reliably reduces the number of required human hours to gather tracking data in dynamics problems. Dynamicists can subsequently spend that time on more enjoyable tasks, such as developing predictive interpretable models, analyzing bifurcations, or proving the existence of interesting topological properties of phase space. Furthermore, students will have the chance to absorb far more dynamics training from research projects than they otherwise would by simply tracking objects on a frame-by-frame basis. Ultimately, this tracking framework has the potential to significantly improve the workflow of many projects and enable the accurate inference or characterization of the underlying dynamical system that generates the observations.

References

[1] Aharon, N., Orfaig, R., & Bobrovsky, B.-Z. (2022). BoT-SORT: Robust associations multi-pedestrian tracking. Preprint, arXiv:2206.14651.

[2] Brunton, S.L., Proctor, J.L., & Kutz, J.N. (2016). Discovering governing equations from data by sparse identification of nonlinear dynamical systems. Proc. Natl. Acad. Sci., 113(15), 3932-3937.

[3] Bush, J.W.M. (2010). Quantum mechanics writ large. Proc. Natl. Acad. Sci., 107(41), 17455-17456.

[4] Cao, J., Pang, J., Weng, X., Khirodkar, R., & Kitani, K. (2023). Observation-centric SORT: Rethinking SORT for robust multi-object tracking. Preprint, arXiv:2203.14360.

[5] Du, Y., Zhao, Z., Song, Y., Zhao, Y., Su, F., Gong, T., & Meng, H. (2022). StrongSORT: Make DeepSORT great again. Preprint, arXiv:2202.13514.

[6] Goodman, R.H., Rahman, A., Bellanich, M.J., & Morrison, C.N. (2015). A mechanical analog of the two-bounce resonance of solitary waves: Modeling and experiment. Chaos, 25(4), 043109.

[7] Kaheman, K., Kutz, J.N., & Brunton, S.L. (2020). SINDy-PI: A robust algorithm for parallel implicit sparse identification of nonlinear dynamics. Proc. R. Soc. A, 476(2242), 20200279.

[8] Kara, E., Zhang, G., Williams, J.J., Ferrandez-Quinto, G., Rhoden, L.J., Kim, M., … Rahman, A. (2023). Deep learning based object tracking in walking droplet and granular intruder experiments. J. Real-Time Image Process., 20, 86.

[9] Maggiolino, G., Ahmad, A., Cao, J., & Kitani, K. (2023). Deep OC-SORT: Multi-pedestrian tracking by adaptive re-identification. Preprint, arXiv:2302.11813.

[10] Shaffer, I., & Abaid, N. (2020). Transfer entropy analysis of interactions between bats using position and echolocation data. Entropy, 22(10), 1176.

[11] Zhang, Y., Sun, P., Jiang, Y., Yu, D., Weng, F., Yuan, Z., … Wang, X. (2022). ByteTrack: Multi-object tracking by associating every detection box. In Computer vision – ECCV 2022 (pp. 1-21). Tel Aviv, Israel: Springer.

About the Authors

Aminur Rahman

Instructor, University of Washington

Aminur Rahman is an acting instructor in the Department of Applied Mathematics at the University of Washington and a postdoctoral researcher in the AI Institute in Dynamic Systems. His research involves formulating mechanistic models of real-world phenomena and analyzing them via dynamical systems theory, numerical methods, and data-driven techniques.

Erdi Kara

Assistant Professor, Spelman College

Erdi Kara is an assistant professor in the Department of Mathematics at Spelman College. His research focuses on physics-guided computational modeling, which encompasses topics such as graph neural networks, physics-informed machine learning, and operator learning.

J. Nathan Kutz

Professor, University of Washington

J. Nathan Kutz is the Robert Bolles and Yasuko Endo Professor of Applied Mathematics and Electrical and Computer Engineering at the University of Washington, where he works at the intersection of data analysis and dynamical systems. He is also director of the AI Institute in Dynamic Systems.
